Over the last decade there have been alarmist predictions of growing data center energy consumption. These predictions did not materialize. Global data center energy consumption is hard to estimate, so decent studies are sparse. A good recent bottom-up study [1] showed that between 2010 and 2018, while data center compute instances increased by a factor of 6, IP traffic by a factor of 10 and storage capacity by a factor of 25, the energy consumption of global data centers increased by only 6 percent (from 194 TWh in 2010 to 205 TWh in 2018). That is still a lot: about 1 percent of global electricity consumption.

However, it is remarkable that the growth in data center workloads is clearly decoupled from the growth in data center energy consumption. Part of the explanation is the adoption of public cloud computing. Public cloud providers run hyperscale data centers, which are much more efficient than smaller-scale enterprise data centers.
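
To make the decoupling concrete, the figures quoted from [1] can be turned into a rough energy intensity per unit of workload. This is only a back-of-the-envelope sketch: dividing total energy growth by each workload growth factor is a simplification made here, not the method of the study, and the function name is illustrative.

```python
# Figures quoted in the text from study [1].
energy_2010_twh = 194.0
energy_2018_twh = 205.0
growth_factors = {
    "compute instances": 6.0,
    "IP traffic": 10.0,
    "storage capacity": 25.0,
}

# Total energy grew by a factor of roughly 1.06 (about +6 percent).
energy_growth = energy_2018_twh / energy_2010_twh


def intensity_change(workload_growth: float) -> float:
    """Relative change in energy per unit of workload, 2010 to 2018.

    Simplifying assumption: all energy is attributed to the one metric.
    """
    return energy_growth / workload_growth - 1.0


for name, factor in growth_factors.items():
    print(f"Energy per unit of {name}: {intensity_change(factor):+.0%}")
```

Under this simplification, energy per compute instance fell by more than 80 percent over the period, while total consumption barely moved.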

Drivers for energy efficiency

In an on-premises data center, hardware is dimensioned to meet peak demands. These peak demands are often theoretical and never reached. On-premises data centers can also include zombie servers: servers someone simply forgot to switch off. Zombies draw energy while doing no useful work at all.

A data center of a public cloud provider has a higher utilization rate. A public cloud provider uses a multi-tenant model, where multiple customers or tenants share the same hardware resources. As the number of tenants increases, the ratio of peak to average demand decreases. The cloud is dimensioned to meet the time-coincident demand of all tenants combined. The elasticity of the cloud allows resources to be scaled up or down dynamically, so there is no need to provision resources for peak demand upfront. These cloud characteristics lead to a higher utilization rate.
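
The effect of multi-tenancy on the peak-to-average ratio can be illustrated with a small simulation. Everything in it is an assumption made for illustration: each hypothetical tenant has a baseline load of 1 unit and a 2-hour burst of 10 units at a random time of day, and tenants are independent of each other.

```python
import random

HOURS = 24


def tenant_profile(rng: random.Random) -> list[float]:
    """Hypothetical tenant: 1 unit baseline, 10 units during a 2-hour burst."""
    start = rng.randrange(HOURS)
    burst_hours = {start, (start + 1) % HOURS}
    return [10.0 if hour in burst_hours else 1.0 for hour in range(HOURS)]


def peak_to_average(profile: list[float]) -> float:
    return max(profile) / (sum(profile) / len(profile))


def combined(profiles: list[list[float]]) -> list[float]:
    """Time-coincident demand: the sum of all tenants' loads, hour by hour."""
    return [sum(hour_loads) for hour_loads in zip(*profiles)]


rng = random.Random(42)  # fixed seed so runs are repeatable
for n in (1, 10, 100, 1000):
    profiles = [tenant_profile(rng) for _ in range(n)]
    total = combined(profiles)
    dedicated = sum(max(p) for p in profiles)  # every tenant sized for its own peak
    shared = max(total)                        # one pool sized for the coincident peak
    print(f"{n:5d} tenants: peak/average = {peak_to_average(total):.2f}, "
          f"shared capacity = {shared / dedicated:.0%} of dedicated capacity")
```

Because the bursts rarely coincide, the combined peak grows much more slowly than the sum of the individual peaks, which is why a shared pool needs less hardware than separately dimensioned on-premises environments.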

Server utilization

Higher equipment utilization rates mean the same amount of work can be done with fewer servers. While a server with a high utilization rate consumes more electricity, it consumes less electricity per unit of useful output.
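
A minimal sketch of why this holds, assuming a simple linear power model in which a server draws a fixed idle power plus a part proportional to its utilization. The wattages, the workload and the fleet sizes are hypothetical values chosen for illustration, not measurements.

```python
IDLE_WATTS = 100.0  # assumed draw at 0 percent utilization
PEAK_WATTS = 200.0  # assumed draw at 100 percent utilization


def server_power(utilization: float) -> float:
    """Linear power model (an assumption): idle draw plus a load-dependent part."""
    return IDLE_WATTS + (PEAK_WATTS - IDLE_WATTS) * utilization


def fleet_watts(workload: float, servers: int) -> float:
    """Total draw when `workload` (in fully busy server equivalents)
    is spread evenly over `servers` machines."""
    utilization = workload / servers
    return servers * server_power(utilization)


workload = 30.0  # hypothetical: work equal to 30 fully busy servers

# Same workload on a lightly used fleet (15 percent) and a busy fleet (60 percent).
for label, servers in (("15% utilization", 200), ("60% utilization", 50)):
    total = fleet_watts(workload, servers)
    print(f"{label}: {servers} servers, "
          f"{total / servers:.0f} W per server, "
          f"{total / workload:.0f} W per unit of work")
```

In this model each busy server draws more power than a lightly used one, yet the fleet as a whole needs far fewer watts per unit of work, because less idle power is spent on machines that are mostly waiting.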

Due to a higher utilization rate, hardware in public cloud data centers has a higher refresh rate. Hardware is replaced by newer, more energy-efficient hardware. Processor energy use per computation goes down. Processor idle power usage goes down. Watts per terabyte of storage go down. Storage drive density increases.

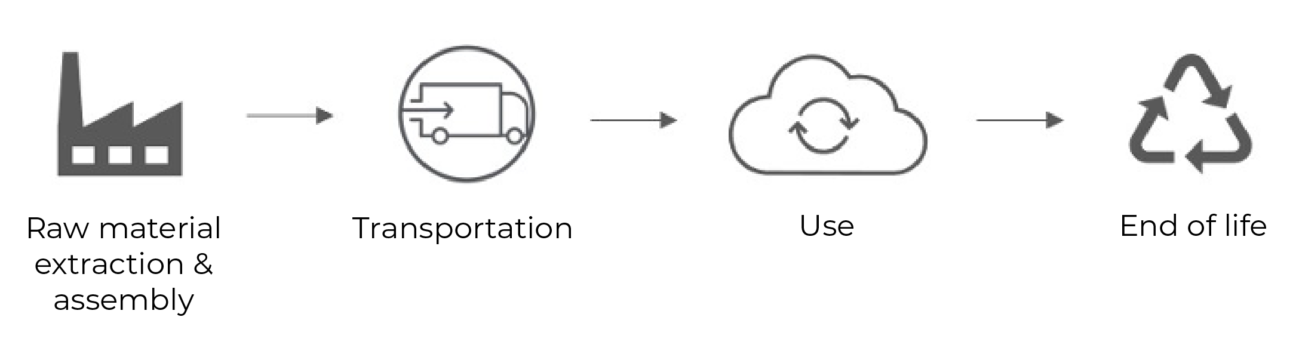

Full equipment lifecycle

When assessing the energy consumption and emissions of hardware, the full lifecycle needs to be considered. While a higher hardware refresh rate has a positive impact on the use phase of the equipment lifecycle, it has a negative impact on the raw material extraction, assembly, transportation, and disposal phases.

Public cloud providers have a strong incentive to tailor hardware to be as efficient as possible. Servers in public cloud data centers are bare-bones. There are no blinking LEDs, because nobody is looking. The video connectors are stripped out because no monitor is connected. Adding up many of these marginal gains can lead to an energy reduction of up to 10 percent.

Finally, public cloud providers have an efficient data center infrastructure. With innovative approaches, less electricity is used for cooling, backup power, and lighting than in enterprise data centers. Cooling becomes less energy hungry when the data center is located in an area with a cooler climate. Cooling systems are managed efficiently by artificial intelligence. Hot-water cooling systems are applied. These are just a few of the approaches that are not directly available in a conventional data center.

Power usage effectiveness (PUE)

Power usage effectiveness is the ratio of the total electricity consumption of a data center to the electricity used to run IT hardware. A PUE of 1 would be a perfect score. A conventional data center typically has a PUE of around 2. Hyperscale data centers often report a PUE of 1.2 or less.
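
The definition translates directly into a small calculation. The sketch below compares the two PUE values mentioned in the text for the same IT load; the load of 1,000 kWh and the function names are assumptions chosen for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def total_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Total facility energy needed to deliver a given IT load at a given PUE."""
    return it_equipment_kwh * pue_value


it_load_kwh = 1000.0  # hypothetical IT load, identical in both data centers

conventional = total_kwh(it_load_kwh, 2.0)  # PUE of 2
hyperscale = total_kwh(it_load_kwh, 1.2)    # PUE of 1.2

# Share of total electricity saved by the lower PUE for the same IT work.
saving = 1.0 - hyperscale / conventional

print(f"Conventional: {conventional:.0f} kWh, of which "
      f"{conventional - it_load_kwh:.0f} kWh is overhead")
print(f"Hyperscale:   {hyperscale:.0f} kWh, of which "
      f"{hyperscale - it_load_kwh:.0f} kWh is overhead")
print(f"Electricity saved for the same IT load: {saving:.0%}")
```

Everything above the IT load is overhead for cooling, power distribution, lighting and the like, so moving from a PUE of 2 to 1.2 cuts that overhead from 100 percent of the IT load to 20 percent.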

Looking ahead

Continued efficiency progress is hard to predict. According to the aforementioned study [1], enough efficiency can still be gained to absorb the next doubling of IT service consumption within the next three or four years. Knowing that the adoption of public cloud is still increasing, this seems a fair assumption.

In the long run, it is unlikely that efficiency gains will keep pace with the ever-increasing demand for information services. Newer services, like artificial intelligence, are particularly computationally intensive and will drive demand even further.

Investments in renewable energy are needed to minimize the climate implications of our thirst for data. Public cloud providers like AWS and Microsoft are setting ambitious goals in this regard. Amazon is already the largest corporate purchaser of renewable energy. Amazon’s goal is to become net-zero carbon by 2040. Microsoft aims to become carbon negative by 2030 and, by 2050, to have removed all the carbon it has emitted since its founding in 1975.

Carbon benefits of cloud computing

Public cloud services are more efficient than those provided in on-premises data centers. Public cloud providers are adopting renewable electricity on a massive scale. These two factors combined make public cloud carbon efficient. According to a Microsoft study, the Microsoft cloud is between 72 and 98 percent more carbon efficient than a traditional data center [2]. A study commissioned by AWS reports a carbon footprint that is up to 88 percent lower [3].

As a teenager I used to walk around in a t-shirt that read “This body is in danger”. To this day I still care. Bill and Jeff care. Do your employees, customers, partners, and shareholders care? Has your organization set ambitious climate goals? Adopting public cloud computing can have a demonstrable positive effect on your organization’s carbon footprint.

Further reading

- [1] Masanet, Eric, Arman Shehabi, Nuoa Lei, Sarah Smith, and Jonathan Koomey. “Recalibrating Global Data Center Energy-Use Estimates.” Science 367, no. 6481 (2020): 984-986.

- [2] The carbon benefits of cloud computing. A study on the Microsoft Cloud in partnership with WSP. Microsoft (2020).

- [3] The Carbon Reduction Opportunity of Moving to Amazon Web Services. 451 Research, commissioned by AWS (2019).