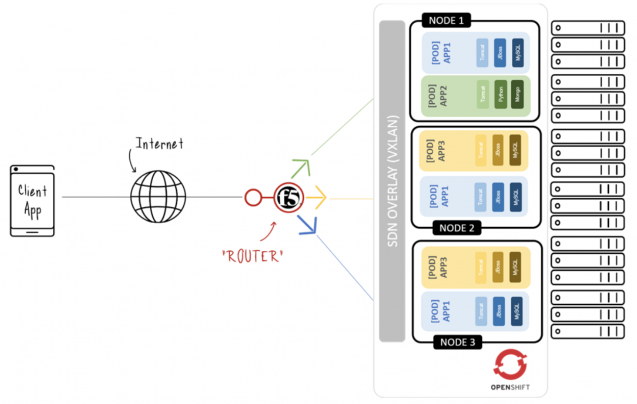

The popularity of microservice architecture has increased, and with it the popularity of containerized applications. Solutions like Red Hat OpenShift Container Platform provide a beneficial environment for managing a fleet of containers. While the platform does an excellent job of scaling containerized applications, the way requests are routed to those containers still leaves room for improvement.

In a traditional installation, requests are routed into the SDN overlay network via an upstream proxy. However, Red Hat has developed a plug-in that supports an F5 BIG-IP appliance as the router. In earlier deployments, a ramp node was required in the cluster to act as a gateway between the F5 appliance and the pods. The ramp node was needed to bridge VxLAN and VLAN traffic, but it added configuration overhead and could become a bottleneck. Newer releases support adding the F5 BIG-IP appliance as a VxLAN VTEP endpoint, which gives it direct access to running pods. When a container is launched or killed, the update can go directly to the F5 BIG-IP appliance via the F5 REST API instead of going through the ramp node.

F5 Integration: F5 Router Plug-in

An existing F5 BIG-IP appliance can be integrated with OpenShift by using the F5 Router plug-in. There are two ways to integrate the appliance: through a ramp node or natively.

The F5 Router plug-in integrates with an F5 BIG-IP system (version 11.4 or higher) via the F5 iControl REST API. The F5 router supports unsecured, edge-terminated, re-encryption-terminated, and passthrough routes that match on HTTP vhost and request path.

Source: DevCentral

Using Ramp Node

The F5 BIG-IP can be integrated with the OpenShift SDN by configuring a peer-to-peer tunnel with a host on the SDN. This host is the ramp node; it can be marked unschedulable for pods so that it acts only as a gateway for the F5 BIG-IP appliance. The F5 router plug-in running on OpenShift interacts with the F5 BIG-IP appliance through F5 REST API calls. When a user creates a route on OpenShift Container Platform, the router creates a pool on the F5 BIG-IP appliance (if one does not already exist) and adds a rule to the policy of the appropriate vServer: the HTTP vServer for non-TLS routes, or the HTTPS vServer for edge-terminated or re-encrypted routes. When the user deletes the route, the router removes the corresponding rule from that policy.
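The route handling described above can be sketched as a small in-memory model. This is a minimal illustration under stated assumptions, not the plug-in's code: the vServer names `http-vserver`/`https-vserver`, the `openshift_<service>` pool naming, and the helpers `vserver_for`, `on_route_added` and `on_route_deleted` are all hypothetical. The text does not say how passthrough routes map to a policy, so the sketch adds no rule for them.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Hypothetical virtual server names; real names depend on how the
# F5 router plug-in is configured.
HTTP_VSERVER = "http-vserver"
HTTPS_VSERVER = "https-vserver"

Rule = Tuple[str, str, str]  # (host, path, pool)


@dataclass
class F5State:
    """In-memory stand-in for the pools and policy rules on the appliance."""
    pools: Set[str] = field(default_factory=set)
    rules: Dict[str, Set[Rule]] = field(default_factory=dict)


def pool_name(service: str) -> str:
    # Hypothetical naming scheme for the pool backing a service.
    return f"openshift_{service}"


def vserver_for(termination: Optional[str]) -> Optional[str]:
    """Pick the vServer whose policy receives the rule for a route."""
    if termination is None:  # unsecured (non-TLS) route
        return HTTP_VSERVER
    if termination in ("edge", "reencrypt"):
        return HTTPS_VSERVER
    return None  # e.g. passthrough: no policy rule in this sketch


def on_route_added(state: F5State, host: str, path: str, service: str,
                   termination: Optional[str] = None) -> str:
    pool = pool_name(service)
    state.pools.add(pool)  # no-op if the pool already exists
    vs = vserver_for(termination)
    if vs is not None:
        state.rules.setdefault(vs, set()).add((host, path, pool))
    return pool


def on_route_deleted(state: F5State, host: str, path: str, service: str,
                     termination: Optional[str] = None) -> None:
    # Only the policy rule is removed here; pool cleanup depends on endpoints.
    vs = vserver_for(termination)
    if vs is not None:
        state.rules.get(vs, set()).discard((host, path, pool_name(service)))
```

Keeping the pool separate from the rule mirrors the text: a route deletion removes its rule, while the pool's lifetime is tied to the service endpoints.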

When a user creates a service on OpenShift Container Platform, the F5 router adds a pool to the F5 BIG-IP appliance (if one does not already exist). As endpoints for that service are created or deleted, the router adds or removes the corresponding members in the pool. When the user deletes the route and all endpoints associated with a particular pool, the router deletes that pool.
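The pool and member bookkeeping can be sketched as a reconcile step that returns the iControl REST calls it would issue. The `/mgmt/tm/ltm/pool` collection and its `members` sub-collection belong to the iControl REST API; the `Common` partition, the `ip:port` member names and the helper names are assumptions for illustration. The function only builds the call list and sends nothing.

```python
from typing import List, Optional, Set, Tuple

POOL_COLLECTION = "/mgmt/tm/ltm/pool"
PARTITION = "Common"  # assumption: objects live in the Common partition

Call = Tuple[str, str, Optional[dict]]  # (HTTP method, path, JSON body)


def pool_path(pool: str) -> str:
    # iControl REST addresses a named object as ~Partition~Name.
    return f"{POOL_COLLECTION}/~{PARTITION}~{pool}"


def reconcile_pool(pool: str, current: Optional[Set[str]],
                   endpoints: Set[str], route_exists: bool) -> List[Call]:
    """Return the calls that bring `pool` in line with the service endpoints.

    current:   member names ("ip:port") already in the pool, or None if
               the pool does not exist yet.
    endpoints: "ip:port" strings for the service's current endpoints.
    """
    calls: List[Call] = []
    if not route_exists and not endpoints:
        # Route and all endpoints are gone: delete the pool if present.
        if current is not None:
            calls.append(("DELETE", pool_path(pool), None))
        return calls
    if current is None:
        calls.append(("POST", POOL_COLLECTION,
                      {"name": pool, "partition": PARTITION}))
        current = set()
    members = f"{pool_path(pool)}/members"
    for m in sorted(endpoints - current):  # new endpoints become members
        calls.append(("POST", members, {"name": m}))
    for m in sorted(current - endpoints):  # vanished endpoints are removed
        calls.append(("DELETE", f"{members}/~{PARTITION}~{m}", None))
    return calls
```

Computing a diff rather than reacting to each event individually makes the step idempotent: running it twice with the same inputs produces no further calls once the pool matches.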

F5 Native Integration

With the F5 native integration, there is no need to configure a ramp node for F5 to be able to reach the pods on the overlay network created by the OpenShift SDN. However, the native integration is only supported for version 12.x (or higher) of F5 BIG-IP, and the SDN-services add-on license is required for the integration to work properly.
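The version and licensing constraints for the two integration modes can be captured in a small check. `supported_modes` and `parse_version` are hypothetical helpers that encode only the rules stated in this article: version 11.4 or higher for the F5 Router plug-in, and version 12.x or higher plus the SDN-services add-on for native integration. Version strings with suffixes (for example hotfix tags) are not handled.

```python
from typing import List, Tuple


def parse_version(version: str) -> Tuple[int, int]:
    """'12.1.2' -> (12, 1); a bare major such as '13' -> (13, 0)."""
    parts = [int(p) for p in version.split(".")]
    major, minor = (parts + [0])[:2]
    return major, minor


def supported_modes(bigip_version: str, has_sdn_services: bool) -> List[str]:
    """Return the integration modes this BIG-IP version and license allow."""
    v = parse_version(bigip_version)
    modes: List[str] = []
    if v >= (11, 4):  # minimum for the F5 Router plug-in
        modes.append("ramp-node")
    if v >= (12, 0) and has_sdn_services:  # native integration
        modes.append("native")
    return modes
```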

The F5 BIG-IP appliance connects to the OpenShift Container Platform cluster over an L3 connection. On the F5 appliance, several interfaces are used to manage the integration:

- Management Interface: used to reach the web console of the F5 appliance.

- External Interface: used for inbound traffic.

- Internal Interface: used to program the appliance and reach the pods.

The F5 controller pod, i.e. the F5 router, has admin access to the appliance. When natively integrated, the F5 appliance reaches the pods directly using VxLAN encapsulation. The integration only works when OpenShift Container Platform uses openshift-sdn as the network plug-in, because that plug-in uses VxLAN encapsulation for the overlay network it creates.
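Because the native integration depends on VxLAN encapsulation, it may help to see what the VxLAN header itself carries. The sketch below builds and parses the 8-byte header defined in RFC 7348: a flags byte with the I bit set, reserved bits, and a 24-bit VXLAN Network Identifier (VNI). In openshift-sdn the VNI field carries the namespace VNID, which is why the requirement of a VNID of 0 for the F5 matters. The function names are illustrative; the outer Ethernet/IP/UDP headers are not modeled.

```python
import struct

VXLAN_FLAG_I = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VxLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags byte followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VxLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return vni_word >> 8
```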

To establish a working data path between a pod and the F5 appliance, the following five requirements must be met:

- F5 needs to encapsulate packets meant for the pods in VxLAN. This requires the SDN-services license add-on. A VxLAN device has to be created, and the pod overlay network has to be routed through this device.

- F5 needs to know the VTEP IP address of each pod, which is the IP address of the node hosting that pod.

- F5 needs to know which source IP address to use on the overlay network when encapsulating packets meant for the pods. This is known as the gateway address.

- OpenShift Container Platform nodes need to know where the F5 gateway address is, i.e. the VTEP address to use for return traffic. This has to be the address of the internal interface, and all cluster nodes must learn it automatically.

- Since the overlay network is multi-tenant aware, F5 must use a VxLAN ID that represents an admin domain, ensuring that all tenants are reachable by the F5. Ensure that F5 encapsulates all packets with a VNID of 0 (the default VNID for the admin namespace in OpenShift Container Platform) by adding the annotation pod.network.openshift.io/fixed-vnid-host: 0 to the manually created hostsubnet.
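The requirements above can be tied together in a lookup that, for a given pod IP, finds the node subnet containing it and returns the addressing F5 would use to encapsulate a packet: the node's IP as outer destination (VTEP), the F5 internal interface as outer source, the gateway address as inner source, and VNID 0. This is a conceptual model only; `HostSubnet`, `Encap`, `encap_for` and all addresses are hypothetical, and on a real appliance this state lives in the VxLAN tunnel, self IPs and FDB rather than in Python objects.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class HostSubnet:
    host: str
    host_ip: str  # node IP, i.e. the VTEP for pods on this node
    subnet: str   # pod CIDR allocated to the node


@dataclass(frozen=True)
class Encap:
    outer_src: str  # F5 internal-interface address (the F5 VTEP)
    outer_dst: str  # VTEP of the node hosting the pod
    inner_src: str  # F5 gateway address on the overlay network
    inner_dst: str  # pod IP
    vni: int        # VNID used for encapsulation


def encap_for(pod_ip: str, subnets: Iterable[HostSubnet], f5_vtep: str,
              gateway: str, vni: int = 0) -> Encap:
    """Find the node whose subnet contains pod_ip and describe the encapsulation."""
    addr = ipaddress.ip_address(pod_ip)
    for hs in subnets:
        if addr in ipaddress.ip_network(hs.subnet):
            return Encap(f5_vtep, hs.host_ip, gateway, pod_ip, vni)
    raise LookupError(f"no hostsubnet contains {pod_ip}")
```

For example, with node subnets 10.128.0.0/23 and 10.128.2.0/23, a packet for 10.128.2.7 would be tunneled to the second node's IP, carrying VNID 0 so that every tenant namespace accepts it.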

A ghost hostsubnet is created manually as part of the setup; it fulfils the third and fourth requirements. When the F5 controller pod is launched, it is given this ghost hostsubnet so that it can program the F5 appliance accordingly.
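The ghost hostsubnet can be expressed as a manifest. The sketch builds it as a Python dict that can be serialized to JSON and created with `oc create -f`. The `fixed-vnid-host` annotation comes from the requirement above; the `pod.network.openshift.io/assign-subnet: "true"` annotation, the `apiVersion`, the `openshift-f5-node` name and the IP address are assumptions based on OpenShift 3.x examples and should be checked against the documentation for your release. Annotation values are strings, hence `"0"` rather than `0`.

```python
import json


def ghost_hostsubnet(name: str, f5_internal_ip: str) -> dict:
    """Build a HostSubnet manifest representing the F5 appliance."""
    return {
        "kind": "HostSubnet",
        "apiVersion": "v1",  # assumption: OpenShift 3.x API version
        "metadata": {
            "name": name,
            "annotations": {
                # Assumption: asks the master to allocate a subnet for
                # this host even though no real node backs it.
                "pod.network.openshift.io/assign-subnet": "true",
                # Encapsulate with VNID 0 (admin namespace); annotation
                # values are strings, so "0" rather than 0.
                "pod.network.openshift.io/fixed-vnid-host": "0",
            },
        },
        "host": name,
        # F5 internal-interface address: the VTEP nodes use for return traffic.
        "hostIP": f5_internal_ip,
    }


if __name__ == "__main__":
    # Hypothetical name and documentation-range IP address.
    print(json.dumps(ghost_hostsubnet("openshift-f5-node", "192.0.2.10"), indent=2))
```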

The first requirement is fulfilled by the F5 controller pod once it is launched. The second requirement is also fulfilled by the F5 controller pod, but as an ongoing process: for each new node added to the cluster, the pod creates an entry in the VxLAN device's VTEP FDB. The pod therefore needs access to the nodes resource in the cluster, which you can grant by giving its service account the appropriate privileges.
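The ongoing FDB maintenance can be modeled as a diff between the cluster's nodes and the entries already present in the VxLAN device's FDB. This is a simplified sketch: each FDB entry is reduced to the node's IP (real BIG-IP FDB records pair a MAC address with an endpoint), and it also removes entries for nodes that have left the cluster, which the text does not explicitly describe. `fdb_changes` is a hypothetical name.

```python
import ipaddress
from typing import Dict, List, Set, Tuple


def fdb_changes(node_ips: Dict[str, str],
                fdb_vteps: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (to_add, to_remove) VTEP addresses for the VxLAN device FDB.

    node_ips:  node name -> node IP, as read from the cluster's nodes resource.
    fdb_vteps: VTEP addresses that already have an FDB entry.
    """
    desired = set(node_ips.values())
    # Sort numerically by address so the output order is stable.
    to_add = sorted(desired - fdb_vteps, key=ipaddress.ip_address)
    to_remove = sorted(fdb_vteps - desired, key=ipaddress.ip_address)
    return to_add, to_remove
```

Running this on every node watch event (or periodically) keeps the FDB converged even if an individual update is missed.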